DeepSeek’s R1, launched in January 2025, has disrupted the AI landscape with its open-source model and unprecedented cost-efficiency, topping Apple’s App Store and challenging industry giants. Meanwhile, OpenAI, the pioneer behind ChatGPT and the o1 model, continues to dominate with its proprietary, enterprise-grade models renowned for robust reasoning and polished performance. But which AI model truly stands out?

This blog dives deep into the DeepSeek vs. OpenAI rivalry, comparing their performance, cost, customization, applications, and more. We’ll explore unique factors like community support, scalability, environmental impact, and future potential. Whether you’re a developer, business leader, or AI enthusiast, this guide will help you decide which model best fits your needs.

Let’s get started!

An Overview of DeepSeek vs. OpenAI

DeepSeek: The Open-Source Disruptor

DeepSeek is a Chinese AI startup that disrupted the industry with the January 2025 release of R1, whose base model was reportedly trained for just $5.58 million. The model is open source under an MIT license, which lets developers freely access and modify its weights, fostering innovation. R1 uses a Mixture-of-Experts architecture that activates about 37 billion of its 671 billion parameters per token, and it delivers impressive performance, particularly in technical and regional tasks like Chinese-language processing. Its rapid adoption, surpassing ChatGPT on the App Store, signals a shift toward accessible AI.

OpenAI: The Proprietary Powerhouse

OpenAI, co-founded in 2015 by Sam Altman, Elon Musk, and others, has shaped modern AI with models like GPT-4o and o1. Known for massive training investments (over $100 million for GPT-4), its proprietary models prioritize enterprise applications, offering strong reasoning and safety features. OpenAI has not disclosed o1’s parameter count; the model delivers polished outputs through a pay-per-use API, catering to businesses that prioritize reliability. A mature ecosystem and extensive documentation further strengthen its position, though these advantages come at a premium cost.

Key Takeaway: DeepSeek’s open-source affordability challenges OpenAI’s costly, controlled dominance, setting the stage for a nuanced comparison.

Performance Comparison

Performance is a critical battleground for AI models. When it comes to real-world capabilities, DeepSeek vs. OpenAI is all about finding out which model is more precise, efficient, and intelligent.

Mathematical Reasoning

DeepSeek-R1 shines in mathematical tasks, scoring 97.3% on the MATH-500 benchmark, slightly edging out OpenAI o1 at 96.4%. This near-parity is remarkable given R1’s lower resource demands, making it a go-to for academic and technical applications requiring precise calculations.

Coding Proficiency

In coding, DeepSeek-R1 outperforms OpenAI o1 in some niche areas, but o1 led the field on the Kotlin_HumanEval benchmark with a 91% success rate. Its ability to generate accurate Kotlin code and explain complex logic makes o1 a favorite among developers. R1’s slower processing speed also limits its real-time coding applications compared to OpenAI models, which excel in dynamic environments.

Reasoning and Problem-Solving

OpenAI’s o1 takes the lead in complex reasoning, excelling in logical analysis and creative problem-solving. On the GSM8K benchmark, o1 consistently outperforms R1, delivering coherent and contextually rich solutions. This makes it ideal for tasks requiring nuanced judgment, such as strategic planning or content creation.

Speed and Latency

Speed is a pain point for DeepSeek. R1’s latency, particularly in coding tasks, lags behind OpenAI’s models, which are optimized for real-time applications. For businesses needing instant responses, OpenAI’s infrastructure provides a clear advantage.

Key Takeaway: DeepSeek-R1 excels in math and coding precision, while o1 dominates in reasoning and speed, catering to different priorities.

Cost and Accessibility

Cost is a defining factor in the DeepSeek vs. OpenAI debate. Here are some stark contrasts.

DeepSeek’s Affordability

The base model behind DeepSeek-R1 was reportedly trained for $5.58 million using 2.78 million GPU hours, and R1 is priced at $0.55 per million input tokens. Its open weights, free under the MIT license, eliminate subscription costs, making it accessible to startups, researchers, and small teams. This affordability democratizes AI innovation, especially for resource-constrained projects.

OpenAI’s Premium Pricing

OpenAI’s training costs reportedly exceed $6 billion for models like o1, with usage priced at $15 per million input tokens. Its pay-per-use API, while user-friendly, targets enterprises with deep pockets.
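To make the price gap concrete, here is a minimal sketch that applies the two input-token prices quoted above to a workload. The 50-million-token monthly volume is a hypothetical figure chosen for illustration, and the calculation ignores output tokens, which are billed separately.

```python
# Input-token prices quoted in this post, in USD per one million tokens.
PRICE_PER_MILLION_INPUT = {
    "deepseek-r1": 0.55,
    "openai-o1": 15.00,
}


def input_cost_usd(model: str, input_tokens: int) -> float:
    """Return the input-token cost in USD for a given number of tokens."""
    return PRICE_PER_MILLION_INPUT[model] * input_tokens / 1_000_000


# Hypothetical workload: 50 million input tokens per month.
monthly_tokens = 50_000_000
for model in PRICE_PER_MILLION_INPUT:
    print(f"{model}: ${input_cost_usd(model, monthly_tokens):,.2f} per month")

# How many times more expensive o1 input tokens are than R1 input tokens.
price_ratio = PRICE_PER_MILLION_INPUT["openai-o1"] / PRICE_PER_MILLION_INPUT["deepseek-r1"]
print(f"o1 input tokens cost about {price_ratio:.1f}x as much as R1 input tokens")
```

At these prices the same workload costs roughly 27 times more on o1, so the gap matters most for high-volume applications.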

Accessibility

DeepSeek’s open weights allow unrestricted commercial use, a boon for developers. OpenAI’s proprietary model, however, requires API access, limiting customization but simplifying integration. For businesses prioritizing ease, OpenAI’s ecosystem is unmatched.

Key Takeaway: DeepSeek’s low cost and open access appeal to budget-conscious users, while OpenAI’s premium model suits high-budget, enterprise needs.

Customization and Flexibility

Customization is critical for industry-specific applications.

DeepSeek’s Open-Source Flexibility

DeepSeek-R1’s open weights enable granular customization, allowing developers to tailor the model for niches like healthcare, e-commerce, or regional language processing. This flexibility is ideal for startups building bespoke solutions, such as medical diagnostics or localized chatbots. However, customization requires technical expertise, which may challenge less experienced teams.

OpenAI’s Streamlined Ecosystem

OpenAI’s proprietary models offer limited customization due to restricted access to weights. However, its API is designed for seamless integration, supporting rapid deployment in enterprise settings. Developers benefit from pre-built tools and extensive documentation, reducing the need for deep technical tweaks.
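As a rough illustration of what API-based integration involves, the sketch below builds (but does not send) a chat-completion request using only Python’s standard library. The endpoint URLs and model names are assumptions based on each provider’s public documentation at the time of writing and should be checked before use; `build_chat_request` and the `PROVIDERS` table are hypothetical helpers, not part of either provider’s SDK.

```python
import json
import urllib.request

# Assumed endpoints and model names; verify against each provider's current docs.
PROVIDERS = {
    "openai": {
        "url": "https://api.openai.com/v1/chat/completions",
        "model": "o1",
    },
    "deepseek": {
        "url": "https://api.deepseek.com/chat/completions",
        "model": "deepseek-reasoner",
    },
}


def build_chat_request(provider: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build a chat-completion HTTP request without sending it."""
    cfg = PROVIDERS[provider]
    body = {
        "model": cfg["model"],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        cfg["url"],
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder key; a real call would pass the request to urllib.request.urlopen.
req = build_chat_request("openai", "YOUR_API_KEY", "Explain Kotlin coroutines briefly.")
print(req.get_method(), req.full_url)
print(json.loads(req.data)["model"])
```

Under these assumptions, switching providers changes only the URL, the model name, and the key, which is why hosted APIs are quick to adopt even though they offer less control than open weights.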

Key Takeaway: DeepSeek empowers developers with customization freedom, while OpenAI prioritizes ease of use for standardized applications.

Safety and Ethical Considerations

Safety is a growing concern in AI. It's crucial to examine the safety protocols and ethical implications that come with each model.

DeepSeek’s Limitations

DeepSeek-R1’s open-source nature raises safety concerns. Its limited bias detection and content moderation features make it riskier for public-facing applications. Allegations that it mimics OpenAI outputs (74% of its outputs were classified as OpenAI-written) also spark ethical debates about data transparency.

OpenAI’s Robust Protocols

OpenAI prioritizes safety, with advanced bias detection and content filtering in o1. These features support compliance with enterprise standards, making it suitable for sensitive applications like legal or financial services.

Key Takeaway: OpenAI’s safety features cater to regulated industries, while DeepSeek’s open model requires careful oversight.

Community and Developer Ecosystem

Beyond performance and pricing, the strength of an AI model often lies in its community support and developer ecosystem.

DeepSeek’s Grassroots Community

DeepSeek’s open-source model fosters a vibrant GitHub community, with global developers contributing plugins, tutorials, and optimizations. Forums like Reddit and Discord buzz with peer-driven support, though official documentation is sparse. This grassroots ecosystem accelerates innovation but may lack the polish of established platforms.

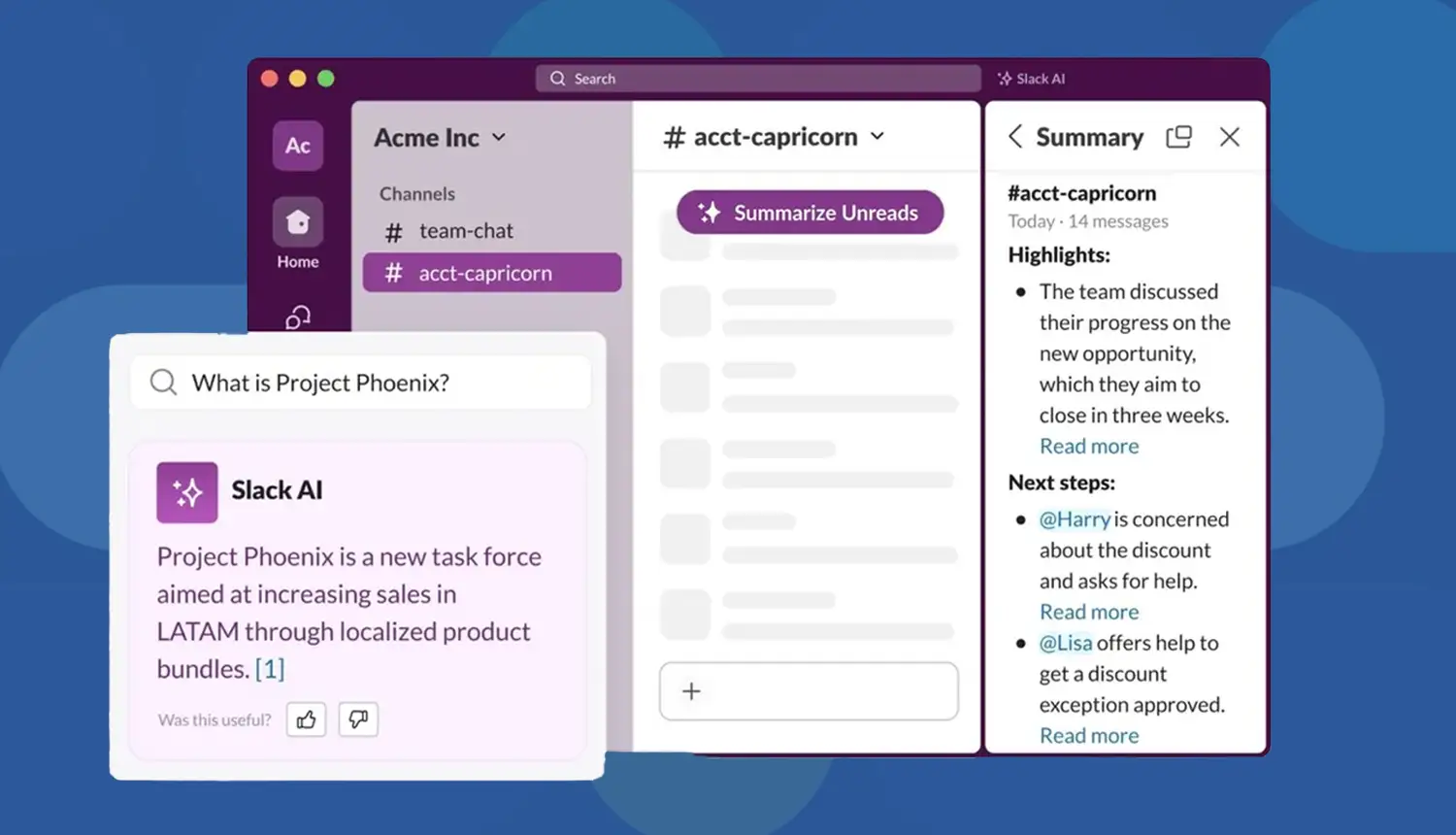

OpenAI’s Mature Ecosystem

OpenAI’s developer ecosystem is robust, with comprehensive documentation, tutorials, and third-party integrations (e.g., LangChain). Its forums and support channels cater to enterprises and hobbyists alike. However, proprietary restrictions limit collaborative innovation compared to DeepSeek’s open model.

Key Takeaway: DeepSeek’s community drives open innovation, while OpenAI’s ecosystem ensures reliability and support.

Scalability and Deployment

Scalability is vital for real-world deployment.

DeepSeek’s Lightweight Design

DeepSeek-R1 activates only about 37 billion of its 671 billion parameters per token, and its distilled variants range from 1.5 billion to 70 billion parameters. The smaller variants can run on local servers or edge devices, which makes the family a good fit for startups or projects with limited infrastructure, such as IoT applications or mobile AI.

OpenAI’s Enterprise Scale

OpenAI’s o1, whose parameter count is undisclosed, runs only on OpenAI’s cloud infrastructure and is suited for high-throughput enterprise applications like global customer support or large-scale analytics. Its scalability comes at the cost of higher resource requirements.
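A back-of-the-envelope way to judge what hardware a model needs is to multiply its parameter count by the bytes stored per parameter. The sketch below does this for the DeepSeek parameter counts cited in this post; the precision options are common choices assumed for illustration, and the estimate covers weights only, not activations or the key-value cache.

```python
def weight_memory_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * bytes_per_param / 1e9


# Parameter counts (in billions) cited in this post for DeepSeek models.
MODELS = {
    "R1 full (671B total)": 671,
    "R1 distilled 70B": 70,
    "R1 distilled 1.5B": 1.5,
}

# Assumed storage precisions: 16-bit, 8-bit, and 4-bit weights.
PRECISIONS = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

for name, params in MODELS.items():
    sizes = ", ".join(
        f"{label}: {weight_memory_gb(params, nbytes):,.1f} GB"
        for label, nbytes in PRECISIONS.items()
    )
    print(f"{name} -> {sizes}")
```

Note that a Mixture-of-Experts model activating 37 billion parameters per token saves compute per token, but all of its weights generally still have to be stored, so only the smaller distilled variants fit comfortably on a single consumer device.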

Key Takeaway: DeepSeek’s lean architecture supports small-scale deployments, while OpenAI excels in large-scale environments.

Environmental Impact

Environmental considerations are increasingly relevant.

DeepSeek’s Efficiency

DeepSeek-R1’s training required 2.78 million GPU hours, significantly less than peers, reducing its carbon footprint (Analytics Vidhya). Its lightweight design further minimizes operational energy, aligning with green AI trends.

OpenAI’s Heavy Footprint

OpenAI’s models, with training estimates of 30.8 million GPU hours for similar scales, consume substantial energy (Analytics Vidhya). While the company invests in carbon offsets, its resource-intensive approach raises sustainability concerns.

Key Takeaway: DeepSeek’s efficiency supports eco-conscious AI, while OpenAI’s scale poses environmental challenges.

Future Roadmap and Innovation Potential

DeepSeek’s Open Innovation

DeepSeek plans multimodal capabilities (e.g., image processing) and expanded language support, leveraging its open-source community. This flexibility positions R1 as a platform for rapid, collaborative advancements.

OpenAI’s Proprietary Advances

OpenAI is developing advanced reasoning models (e.g., the o3 series) and deeper enterprise integrations. Its focus on proprietary innovation ensures cutting-edge features but limits community-driven growth.

Key Takeaway: DeepSeek’s open roadmap fosters collaboration, while OpenAI’s controlled advancements prioritize enterprise excellence.

Which Model Stands Out?

Choosing between DeepSeek and OpenAI depends on your priorities:

- DeepSeek-R1: Ideal for startups, researchers, and technical projects. Its affordability ($0.55 per million input tokens), open-source flexibility, and niche strengths (math, coding, Chinese-language tasks) make it a disruptor. The growing community and eco-friendly design add long-term value, though safety and latency need improvement.

- OpenAI o1: Best for enterprises requiring robust reasoning, safety, and scalability. Its polished performance, mature ecosystem, and real-time efficiency justify the premium cost ($15 per million input tokens). However, proprietary limits and environmental impact may deter some users.

Recommendation: Test both models for your use case. DeepSeek’s GitHub offers free access, while OpenAI’s API provides a trial. For budget-conscious innovation, DeepSeek stands out; for enterprise-grade reliability, OpenAI leads.

DeepSeek vs. OpenAI: Timeline of Advancements

To truly understand the DeepSeek vs. OpenAI debate, let's walk through a timeline of their key advancements.

1. DeepSeek-V3: A High-Performance, Cost-Efficient LLM

- Architecture: 671B parameters with 37B activated per token, utilizing a Mixture-of-Experts (MoE) framework.

- Training Efficiency: Achieved training on 2,048 H800 GPUs over 55 days, costing approximately $5.6 million.

- Performance: Outperforms other open-source models and rivals top closed-source models like GPT-4 and Claude 3.5 Sonnet in various benchmarks.
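The figures above can be cross-checked with a few lines of arithmetic. This sketch uses only numbers quoted in this post (2,048 GPUs, 55 days, 2.78 million GPU hours, $5.58 million, and 37B of 671B parameters active); the implied hourly rate is derived from those figures rather than taken from any published price list.

```python
# Cluster size and duration quoted for DeepSeek-V3 training.
gpus = 2_048
days = 55
gpu_hours_from_cluster = gpus * days * 24

# Totals quoted elsewhere in this post.
reported_gpu_hours = 2_780_000
reported_cost_usd = 5_580_000

# Cost per GPU hour implied by the two reported totals.
implied_rate_usd = reported_cost_usd / reported_gpu_hours

# Share of the model's parameters that are active for each token.
active_fraction = 37 / 671

print(f"GPU hours from cluster size and duration: {gpu_hours_from_cluster:,}")
print(f"Implied cost per GPU hour: ${implied_rate_usd:.2f}")
print(f"Parameters active per token: {active_fraction:.1%}")
```

The cluster-based estimate lands within about 3% of the reported GPU-hour total, which suggests the 55-day figure is a rounded value, and the cost works out to roughly two dollars per GPU hour.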

2. DeepSeek-R1: Enhanced Reasoning via Reinforcement Learning

- Training Approach: Employs multi-stage training with reinforcement learning to boost reasoning capabilities.

- Variants: Includes DeepSeek-R1-Zero and DeepSeek-R1, with the latter addressing issues like readability and language mixing.

- Open-Source Commitment: Released distilled models in multiple sizes (1.5B to 70B parameters) to the research community.

3. DeepSeek-VL2: Advanced Multimodal Understanding

- Capabilities: Excels in visual question answering, OCR, document analysis, and visual grounding.

- Technical Innovations: Introduces dynamic tiling vision encoding and Multi-head Latent Attention for efficient processing.

- Model Variants: Offers Tiny (1.0B), Small (2.8B), and Base (4.5B) versions.

4. DeepSeek-Coder-V2: Elevating Code Intelligence

- Language Support: Expands to 338 programming languages with a context length of up to 128K tokens.

- Benchmark Performance: Surpasses GPT-4 Turbo, Claude 3 Opus, and Gemini 1.5 Pro in coding and math tasks.

- Training Data: Continued pre-training with an additional 6 trillion tokens enhances its capabilities.

OpenAI: Recent Developments

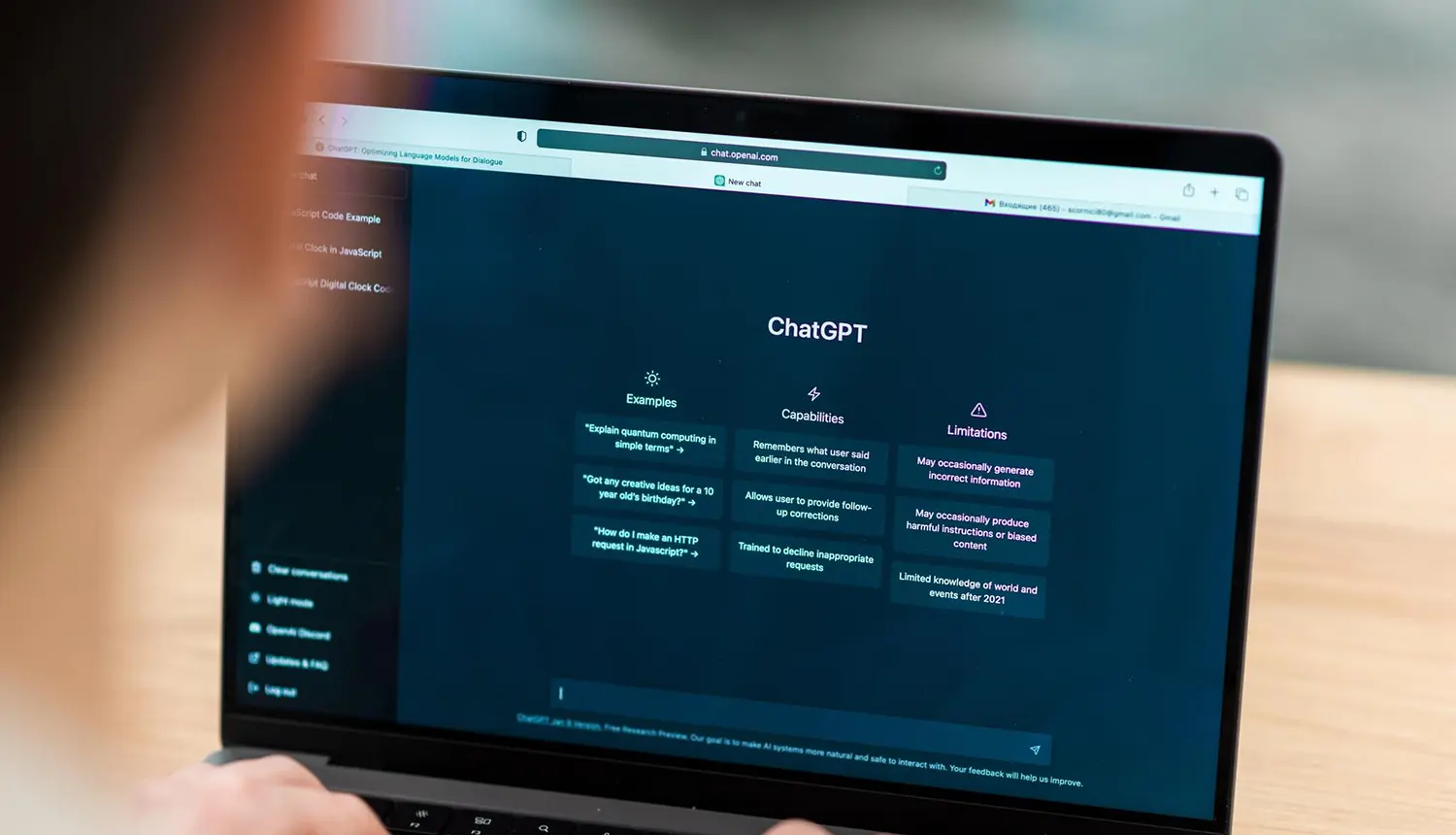

1. ChatGPT's Lightweight Deep Research Tool

- Purpose: Provides a cost-effective version of the deep research tool, delivering concise yet high-quality responses.

- Accessibility: Free users can perform up to five tasks monthly; paid tiers have higher limits.

- Default Activation: Automatically activates for paid users upon reaching usage limits of the original model.

2. Operator: Autonomous Web Interaction Agent

- Functionality: Navigates web pages, performs tasks like clicking and typing, and self-corrects errors.

- Integration: Combines GPT-4o's vision with reinforcement learning for GUI interactions.

- Availability: Currently for ChatGPT Pro users in the U.S., with plans to expand to other tiers.

3. Sora: Text-to-Video Generation Model

- Capabilities: Generates short video clips from text prompts and can extend existing videos.

- Release: Available to ChatGPT Plus and Pro users from December 2024.

- Significance: Marks OpenAI’s entry into the text-to-video generation space.

4. Integration of GPT-Image-1 into Design Tools

OpenAI's enhanced image generation model, "gpt-image-1," is now integrated into Adobe's Firefly and Express apps, as well as Figma's design platform.

- Features: Enables users to generate and edit images using text prompts within design platforms.

- API Access: Available through Images API, with support for the Responses API forthcoming.

5. Strategic Partnership with The Washington Post

OpenAI partnered with The Washington Post to incorporate summaries, excerpts, and links to the publication's original reporting within ChatGPT.

- Implementation: ChatGPT displays this content from The Post in its answers to relevant user queries.

- Broader Impact: Part of OpenAI’s initiative to integrate high-quality journalism into AI platforms.

Supercharge Your Business with BrainX Technologies' Custom AI Solutions

At BrainX Technologies, we don’t just follow AI trends; we engineer them. Our team specializes in developing futuristic Generative AI models, LLMs (Large Language Models), and conversational chatbots powered by advanced NLP (Natural Language Processing) and Transformer architectures.

Whether you need intelligent customer support agents, dynamic content generators, or AI-driven business automation, we deliver scalable, fine-tuned solutions that redefine engagement and efficiency.

Partner with BrainX Technologies because the future belongs to those who build it!